Prompt Testing & Evaluations for Modern Dev Teams

Empower every team member—from product managers and domain experts to software developers and AI engineers—to build, evaluate, and ship LLM-powered workflows, agents, and function-calling integrations.

Testing and Evaluation Capabilities

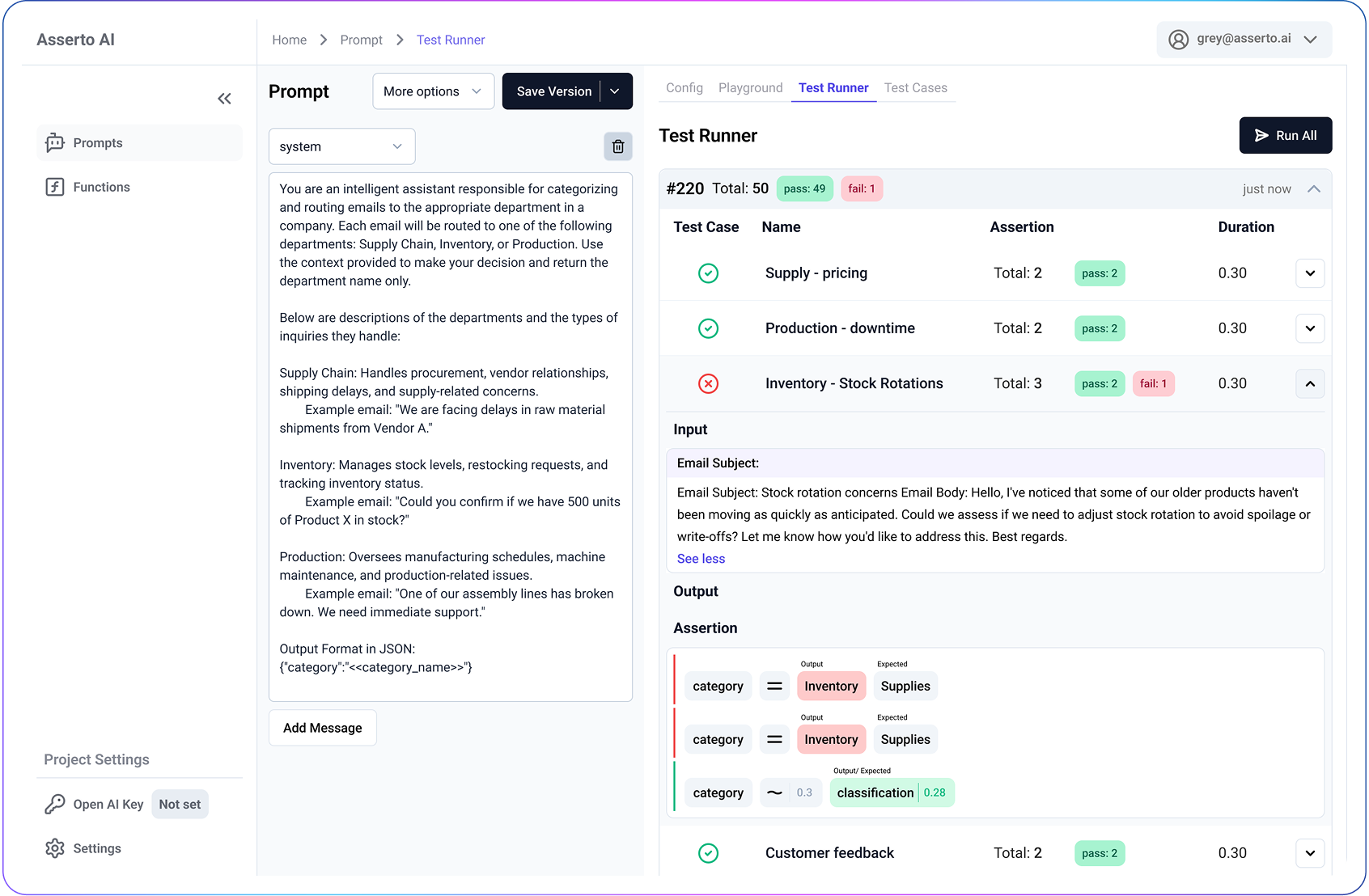

Structured Data Extraction

Extract nested JSON keys and values using JSONPath.

Extract data from XML-style tags (e.g., <tag>value</tag>) to target specific response segments for validation.
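To make the two extraction styles concrete, here is a minimal sketch using only the Python standard library. It is an illustration, not Asserto AI's implementation. `extract_path` and `extract_tag` are hypothetical helpers: the first handles only a small dotted subset of JSONPath (keys and integer indexes), and the second assumes simple, non-nested tags.

```python
import json
import re

def extract_path(document, path):
    """Resolve a simplified JSONPath such as '$.order.items[0].sku'.

    Hypothetical helper: supports only dotted keys and integer indexes,
    not full JSONPath (no wildcards, filters, or slices).
    """
    current = document
    # Each token is either a key name (.key) or a bracketed index ([0]).
    for key, index in re.findall(r"\.([A-Za-z_][\w-]*)|\[(\d+)\]", path):
        current = current[key] if key else current[int(index)]
    return current

def extract_tag(text, tag):
    """Return the text inside the first <tag>...</tag> pair, or None."""
    name = re.escape(tag)
    match = re.search(rf"<{name}>(.*?)</{name}>", text, re.DOTALL)
    return match.group(1).strip() if match else None

# Example model responses (made up for illustration).
response = '{"order": {"items": [{"sku": "A-100", "qty": 2}]}}'
sku = extract_path(json.loads(response), "$.order.items[0].sku")

reply = "Some reasoning first. <answer> 42 </answer>"
answer = extract_tag(reply, "answer")
```

Once a value is isolated this way, it can be validated on its own rather than against the full response text.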

Rich Metrics and Assertions

Check outputs with exact equality, fuzzy similarity, LLM-as-judge semantic checks, or custom logic.

Apply assertions to entire JSON outputs or individual elements.
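The sketch below shows roughly what three of these assertion types could look like when applied to a whole JSON output and to individual elements. All function names are hypothetical, the sample output is made up, and the fuzzy check uses `difflib.SequenceMatcher` from the Python standard library as a stand-in similarity metric. An LLM-as-judge check is omitted because it needs a live model call.

```python
from difflib import SequenceMatcher

def assert_exact(actual, expected):
    """Exact equality check."""
    return actual == expected

def assert_similar(actual, expected, threshold=0.8):
    """Fuzzy check: pass when the similarity ratio meets the threshold."""
    ratio = SequenceMatcher(None, actual.lower(), expected.lower()).ratio()
    return ratio >= threshold

def assert_custom(actual, predicate):
    """Custom logic: pass when the caller-supplied predicate holds."""
    return bool(predicate(actual))

# A parsed model output (illustrative values only).
output = {
    "intent": "refund",
    "summary": "Customer wants a refund for order 1182",
    "confidence": 0.93,
}

results = {
    # Assertion on the entire output: required keys are present.
    "has_required_keys": assert_custom(
        output, lambda o: {"intent", "summary"} <= set(o)
    ),
    # Assertions on individual elements.
    "intent_exact": assert_exact(output["intent"], "refund"),
    "summary_fuzzy": assert_similar(
        output["summary"], "Customer requests a refund for order 1182"
    ),
    "confidence_custom": assert_custom(output["confidence"], lambda c: c >= 0.9),
}
```

Keeping each assertion as a small function that returns a boolean makes it easy to mix check types within a single test case.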

Rich UI Test Designer

Define and manage data-driven test cases via an intuitive no-code interface.

Select individual tests or entire suites and run them across models and versions.
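For readers who think in code, a data-driven suite run across several models can be pictured roughly as follows. This is a conceptual sketch, not the product's API: `PromptCase`, `run_suite`, and the two stand-in model functions are hypothetical, and the stand-ins are plain Python functions so the example runs offline.

```python
from dataclasses import dataclass

@dataclass
class PromptCase:
    name: str
    variables: dict   # values substituted into the prompt template
    expected: str     # expected model output for this case

def run_suite(prompt_template, cases, models):
    """Run every case against every model and return one row per run."""
    rows = []
    for model_name, call_model in models.items():
        for case in cases:
            prompt = prompt_template.format(**case.variables)
            output = call_model(prompt)
            rows.append({
                "model": model_name,
                "case": case.name,
                "passed": output.strip() == case.expected,
            })
    return rows

# Stand-in "models" (hypothetical): real runs would call an LLM provider.
def fake_model_a(prompt):
    return "positive" if "love" in prompt else "negative"

def fake_model_b(prompt):
    return "positive"

cases = [
    PromptCase("happy_review", {"review": "I love it"}, "positive"),
    PromptCase("unhappy_review", {"review": "I hate it"}, "negative"),
]

rows = run_suite(
    "Classify the sentiment of this review: {review}",
    cases,
    {"model-a": fake_model_a, "model-b": fake_model_b},
)
```

Each row records which model and case produced it, so the same suite can be rerun unchanged when a new model or prompt version is added.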

Rapid Iteration & Fast Turnaround

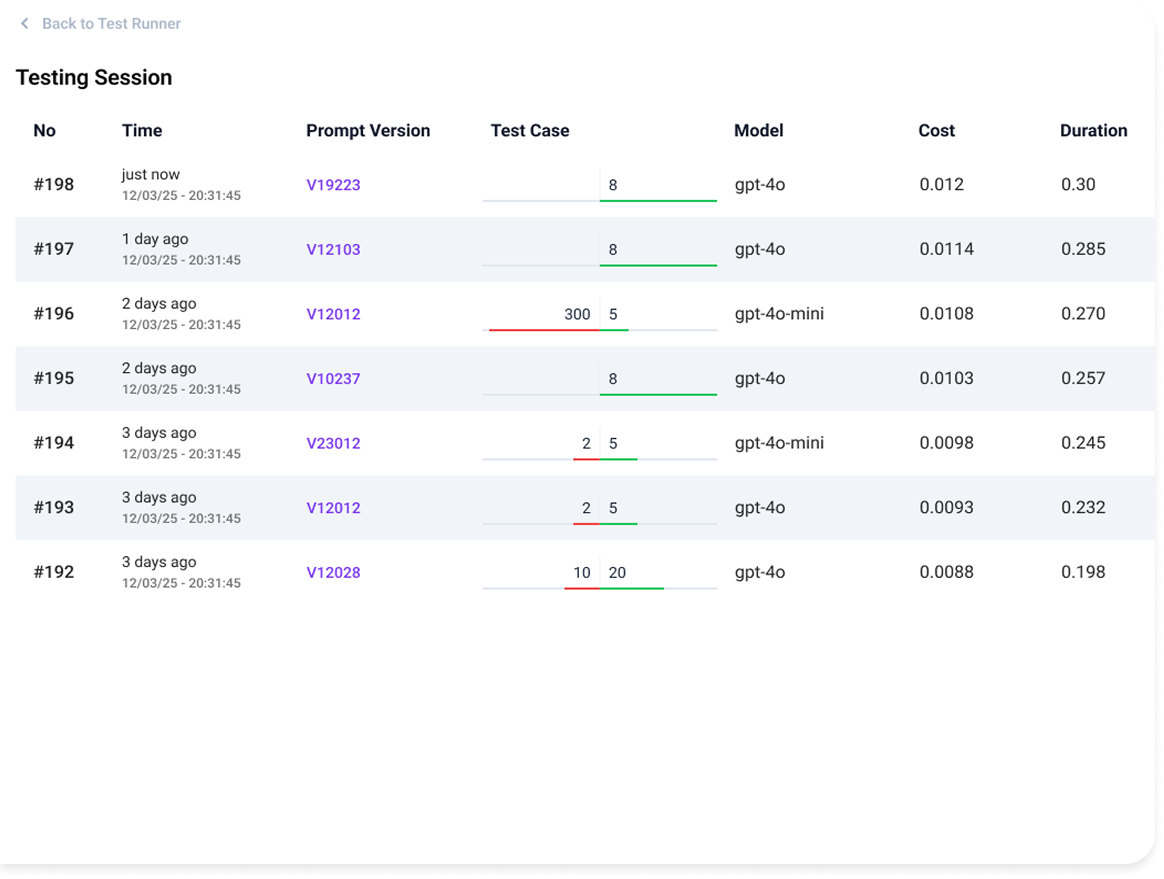

Visualize results instantly, compare outputs, and update prompts on the fly.

Empower the full team with quick feedback loops and version traceability.

Tailored Tools for Every Role

Whether you're a developer, tester, or product owner, Asserto AI provides tailored tools to help you evaluate prompts effectively.

Product Managers & Domain Experts

Build and manage test cases with intuitive UI controls—no code needed.

Validate prompts against real-world business logic and function-calling schemas.

Software & System Developers

Leverage assertion-driven validations to ensure reliable JSON payloads for system integration.

Decouple prompt logic from application code with CMS-style integrations—update prompts dynamically without redeploying services.

AI Engineers & Prompt Optimization Experts

Compare prompt variations across models using pass/fail ratios, similarity scores, and cost metrics.
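As a rough picture of this kind of comparison, the sketch below groups run records by prompt version and model, then computes a pass rate, an average similarity score, and a total cost for each group. The record layout, the `summarize` helper, and every number in `runs` are made up for illustration and do not reflect real results or Asserto AI's data model.

```python
from collections import defaultdict
from statistics import mean

# Illustrative run records (all values invented for this example).
runs = [
    {"prompt": "v1", "model": "model-a", "passed": True,  "similarity": 0.9, "cost_usd": 0.002},
    {"prompt": "v1", "model": "model-a", "passed": False, "similarity": 0.7, "cost_usd": 0.002},
    {"prompt": "v2", "model": "model-a", "passed": True,  "similarity": 0.95, "cost_usd": 0.003},
    {"prompt": "v2", "model": "model-a", "passed": True,  "similarity": 0.85, "cost_usd": 0.003},
]

def summarize(runs):
    """Aggregate runs per (prompt version, model) pair."""
    groups = defaultdict(list)
    for run in runs:
        groups[(run["prompt"], run["model"])].append(run)

    summary = {}
    for key, items in groups.items():
        summary[key] = {
            "pass_rate": sum(r["passed"] for r in items) / len(items),
            "avg_similarity": mean(r["similarity"] for r in items),
            "total_cost_usd": sum(r["cost_usd"] for r in items),
        }
    return summary

summary = summarize(runs)

# Rank prompt/model pairs by pass rate, breaking ties by lower cost.
ranking = sorted(
    summary,
    key=lambda k: (-summary[k]["pass_rate"], summary[k]["total_cost_usd"]),
)
```

Sorting on pass rate first and cost second is one possible policy; a team that cares more about budget could reverse the priority.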

Analyze historical trends to refine prompts for accuracy, speed, and budget efficiency.